In a disastrous SEO experiment, Gary demonstrates that AI can actually distinguish good content from rubbish

Table of Contents

- The Heart of the Revolution: What is AI Rendering?

- The Science Behind AI Prompting: From Theory to Practice

- Understanding Cultural Aesthetics: The Example of Alternative Japanese Fashion

- Optimal Timing Strategies in Diffusion Generation

- Physics-Based Rendering Effects: Wet Look as Technical Challenge

- Comparison Examples: Scientifically Validated Prompt Optimization

- Established Computer Graphics Terminology

- Scientific Prompt Construction Approach

- Frequently Asked Questions (FAQ)

- Conclusion: AI Rendering as Scientific Discipline

- References

- Current Diffusion Model Research

- Cultural and Social Studies

- Prompt Engineering and Computer Vision

- Computer Graphics and Rendering Techniques

I strongly advise against repeating this, because the experiment turned out even worse than I had expected. I wanted to know what would happen if I instructed an AI to string together unrelated trending words and then published the resulting text on my blog.

Would the article, full of current trending words, perform well in classic search engines?

And how would Gemini, Perplexity and Claude rate the completely nonsensical text?

This SEO experiment showed that without genuine added value, even the best keyword mix cannot succeed.

The results were absolutely devastating:

- Massive keyword stuffing was punished. Search engines recognized the text as irrelevant, and visibility crashed dramatically.

- Lack of content quality was punished. Generative AI search engines, the target of generative engine optimization (GEO), could not extract any added value because there was none. The article was ignored.

- Poor user experience was punished. The dwell time on the page plummeted brutally. High bounce rates and frustrated readers are massive warning signals that additionally damage rankings.

I left the article unchanged for a whole month. Once I start something, I don’t change my mind easily. But the AI reacted immediately.

Perplexity was fair: it clearly pointed out that this article was terrible and pretended to be scientific. But at least it said that other articles were well worth reading.

Gemini and Claude, on the other hand, were relentless: after this article was published, the entire blog was torn apart. All the metrics in Google Search and Analytics, which had developed positively in June, plummeted in July. The average reading time dropped from 3.5 minutes to a disastrous 34 seconds.

What should I do now? I couldn’t possibly let the original text remain published as it was.

Instead of deleting this valuable URL, I decided to revise it. This text is the solution: it picks up on the same keywords, but transforms them into a short course on AI rendering.

I hope you will like it this time!

The Heart of the Revolution: What is AI Rendering?

By Gary Owl | Updated August 14, 2025 / Published July 04, 2025 | AI Rendering

Traditional rendering is a lengthy process where computer software meticulously calculates physical laws of light and shadow to create a 2D image from a 3D model. It’s laborious work that requires enormous computing power and time.

AI rendering, on the other hand, harnesses the power of generative artificial intelligence. Instead of calculating physical laws, modern diffusion models have analyzed millions of images and their text descriptions and learned to recognize patterns and connections. They don’t just “understand” what a chair or a cat is, but also how light reflects on different surfaces, which styles harmonize with each other, and how emotions are expressed in faces.

When you enter a prompt, you’re not giving the AI a mathematical command, but a creative instruction. It navigates through a vast, multi-dimensional “latent space” and generates a unique image that matches your text description. The terms in the following sections are precise instructions with which you can guide the AI’s vision.

The Science Behind AI Prompting: From Theory to Practice

Modern diffusion models have revolutionized how we think about image generation. But the best results come not by chance but from well-founded prompt engineering techniques. In this guide, we decode the established concepts that make the difference between an ordinary and an extraordinary image.

Understanding Cultural Aesthetics: The Example of Alternative Japanese Fashion

Instead of invented terms, we focus on scientifically documented cultural phenomena. A fascinating example is the complex world of alternative Japanese subcultures, which shows a deep connection between aesthetics and social tensions.

Jirai Kei (地雷系, “landmine type”) is a mental health-oriented subculture that gained significance during the COVID-19 pandemic. Academic studies show that this movement is more than just fashion – it reflects complex socioeconomic factors and is unfortunately often associated with self-destructive behavior and illegal activities.

The technical background: For an AI, this aesthetic represents a complex network of cultural markers: specific color palettes (black-pink contrasts), Gothic and kawaii elements, as well as emotional expressions. The successful rendering of such cultural codes is a litmus test for the cultural intelligence of an AI model.

Ethical consideration: It’s important to understand that using such cultural references in AI prompts requires cultural sensitivity and should never romanticize the underlying social problems.

Optimal Timing Strategies in Diffusion Generation

Instead of the invented “Wake Windows,” we discuss scientifically established concepts:

Noise Scheduling and Timestep Optimization: Diffusion models don’t generate images all at once, but through an iterative denoising process. Research results show that certain timesteps are more critical than others:

- Early Steps (t=0.7-1.0): Establish rough composition and structure

- Middle Steps (t=0.3-0.7): Optimal window for stylistic interventions

- Late Steps (t=0.0-0.3): Refine details and textures

The practical benefit: Modern prompt engineering techniques use this knowledge to develop time-controlled prompts that give different instructions in various phases of generation.
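The phase boundaries above can be sketched in code. This is a minimal illustration, not the API of any particular diffusion library: the names `Phase`, `phase_for`, `normalized_timesteps`, and `prompt_schedule` are hypothetical, the timestep is assumed to be normalized so that t=1.0 is pure noise and t=0.0 is the finished image, and a value that falls exactly on a boundary is assigned to the earlier phase. How a per-step prompt is actually fed into a sampler depends on the tool and is not shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Phase:
    name: str
    t_min: float  # lower bound of the normalized timestep range


# Boundaries taken from the list above, ordered from noisy (early) to clean (late).
PHASES = (Phase("early", 0.7), Phase("middle", 0.3), Phase("late", 0.0))


def phase_for(t: float) -> str:
    """Return the phase name for a normalized timestep t in [0, 1]."""
    if not 0.0 <= t <= 1.0:
        raise ValueError(f"t must be in [0, 1], got {t}")
    for phase in PHASES:
        if t >= phase.t_min:
            return phase.name
    raise AssertionError("unreachable: t >= 0.0 always matches the late phase")


def normalized_timesteps(num_steps: int) -> list[float]:
    """Evenly spaced timesteps from 1.0 (pure noise) down toward 0.0."""
    return [1.0 - i / num_steps for i in range(num_steps)]


def prompt_schedule(num_steps: int, phase_prompts: dict[str, str]) -> list[tuple[float, str]]:
    """Pair every timestep with the prompt assigned to its phase."""
    return [(t, phase_prompts[phase_for(t)]) for t in normalized_timesteps(num_steps)]


if __name__ == "__main__":
    # Hypothetical per-phase instructions, for illustration only.
    prompts = {
        "early": "portrait, centered composition",
        "middle": "melancholic atmosphere, soft color palette",
        "late": "fine fabric texture, detailed reflections",
    }
    for t, prompt in prompt_schedule(8, prompts):
        print(f"t={t:.3f}  {phase_for(t):6s}  {prompt}")
```

The schedule only decides which instruction applies at which step; whether a given tool accepts a different prompt per step is a separate question.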

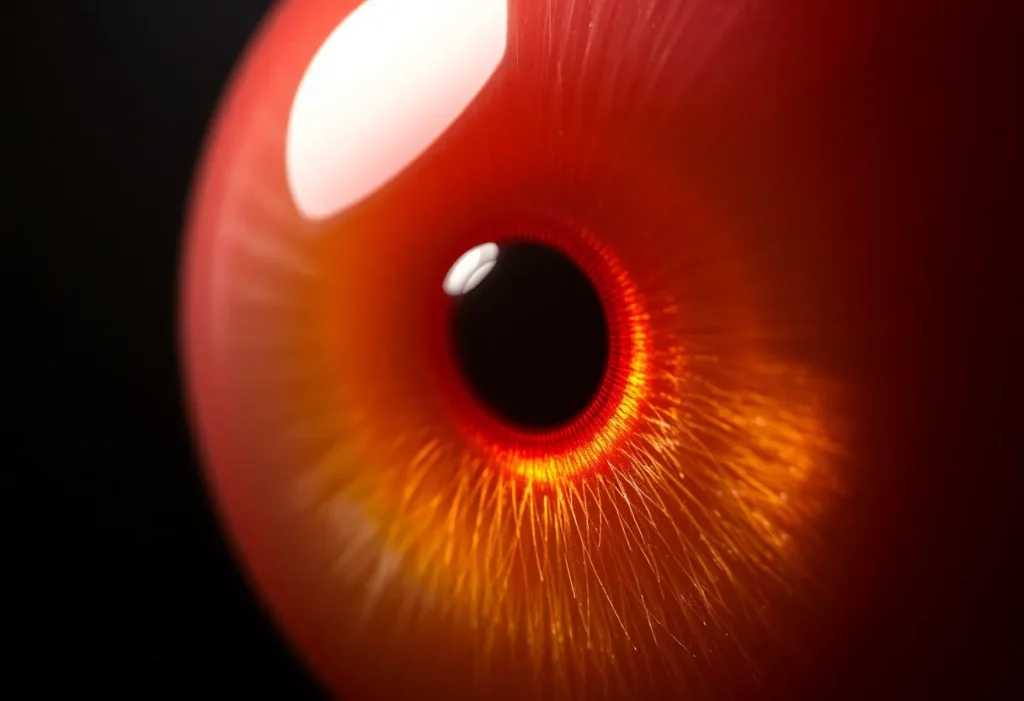

Physics-Based Rendering Effects: Wet Look as Technical Challenge

A wet look is a visual texture that simulates wet surfaces. In traditional rendering, it is a physical simulation that combines multiple optical phenomena; an AI model has to reproduce the same visual result without running that simulation.

The technical background: To render a wet look convincingly, the AI must understand:

- Specular Reflection: Mirror-like reflection of light off a smooth surface

- Fresnel Effects: Angle-dependent reflection

- Surface Tension Simulation: Water droplet formation

Current research shows that AI models approximate these effects not through physical calculation, but through statistical pattern recognition from training data.
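For contrast, here is the kind of explicit calculation a traditional, physics-based renderer performs for angle-dependent reflection. The sketch uses Schlick's approximation of the Fresnel equations, a common shortcut in computer graphics. The function name `schlick_reflectance` is hypothetical, and the refractive indices (1.0 for air, 1.33 for water) are assumed default values for a wet surface.

```python
import math


def schlick_reflectance(cos_theta: float, n1: float = 1.0, n2: float = 1.33) -> float:
    """Approximate the fraction of light reflected at an interface.

    cos_theta: cosine of the angle between the incoming ray and the surface normal.
    n1, n2:    refractive indices of the two media (assumed: air and water).
    """
    # Reflectance at normal incidence (looking straight at the surface).
    r0 = ((n1 - n2) / (n1 + n2)) ** 2
    # Schlick's approximation: reflectance rises steeply toward grazing angles.
    return r0 + (1.0 - r0) * (1.0 - cos_theta) ** 5


if __name__ == "__main__":
    for angle_deg in (0, 45, 80, 89):
        cos_theta = math.cos(math.radians(angle_deg))
        print(f"{angle_deg:2d} degrees: {schlick_reflectance(cos_theta):.3f}")
```

Viewed head-on, water reflects only about 2% of the light, while at grazing angles it becomes almost mirror-like. A diffusion model never evaluates such a formula; it reproduces the resulting look from patterns in its training images.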

Comparison Examples: Scientifically Validated Prompt Optimization

To illustrate the effect of established prompt engineering techniques:

Basic Prompt:

“A cat”

Scientifically optimized prompt:

“A majestic cat, professional photography, shallow depth of field, subtle subsurface scattering, natural lighting with soft shadows”

Established Computer Graphics Terminology

Instead of using obscure terms, we focus on established, scientifically documented rendering techniques:

Subsurface Scattering: The physical effect where light penetrates a translucent material, is scattered inside it, and exits at a different point. Renderers use it to make skin, wax, or marble look realistic.

Chromatic Aberration: An optical “error” that creates color fringes at object edges. In digital image processing, this effect is deliberately used for “analog” or “cinematic” quality.

Depth of Field: Control of the focus plane. Cinematographic studies show how targeted blur guides the eye and creates emotional depth.

Caustics: Complex light patterns that emerge when light is refracted through irregular transparent surfaces. Modern rendering engines use various algorithms for realistic caustics simulation.
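Of the four terms above, chromatic aberration is the simplest to illustrate in code. The sketch below is a deliberately simplified assumption: it shifts the red channel to the right and the blue channel to the left by a fixed number of pixels, whereas real lens aberration is typically radial and grows toward the image edges. The function name `chromatic_aberration` and the plain nested-list image format are hypothetical choices to keep the example dependency-free.

```python
Pixel = tuple[int, int, int]  # (red, green, blue), each 0-255


def chromatic_aberration(image: list[list[Pixel]], shift: int = 1) -> list[list[Pixel]]:
    """Return a copy of `image` with red shifted right and blue shifted left.

    `image` is a list of rows; each row is a list of RGB pixels.
    Samples that would fall outside the row are clamped to the nearest edge pixel.
    """
    result = []
    for row in image:
        last = len(row) - 1
        new_row = []
        for x in range(len(row)):
            red = row[min(max(x - shift, 0), last)][0]   # red comes from the left
            green = row[x][1]                            # green stays in place
            blue = row[min(max(x + shift, 0), last)][2]  # blue comes from the right
            new_row.append((red, green, blue))
        result.append(new_row)
    return result


if __name__ == "__main__":
    black, white = (0, 0, 0), (255, 255, 255)
    # A single white pixel on a black row splits into separate color fringes.
    row = [black, black, white, black, black]
    print(chromatic_aberration([row], shift=1)[0])
```

A thin white edge ends up with a blue fringe on one side and a red fringe on the other, which is the look the prompt term asks the model to imitate.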

Scientific Prompt Construction Approach

Based on established best practices for prompt engineering:

Step 1: Clear Subject Definition

Foundation: Specific, singular focus

“A professional portrait of a contemplative figure”

Step 2: Contextual Embedding

Foundation: Culturally informed aesthetics

“in alternative Japanese fashion style, melancholic atmosphere”

Step 3: Technical Specification

Foundation: Established rendering terminology

“wet surface reflections, subtle rim lighting”

Step 4: Professional Camera Technique

Foundation: Cinematographic standards

“shallow depth of field, 85mm lens equivalent, soft shadows”

Step 5: Physically Correct Details

Foundation: Computer graphics principles

“realistic subsurface scattering, color-correct chromatic aberration”
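The five steps above can be assembled programmatically. This is a minimal sketch: the class `PromptSpec` and its field names are hypothetical, and joining the fragments with commas is an assumed convention, not a requirement of any particular model.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    subject: str    # Step 1: clear subject definition
    context: str    # Step 2: contextual embedding
    technical: str  # Step 3: technical specification
    camera: str     # Step 4: professional camera technique
    details: str    # Step 5: physically correct details

    def build(self) -> str:
        """Join the non-empty fragments into one comma-separated prompt."""
        parts = [self.subject, self.context, self.technical, self.camera, self.details]
        return ", ".join(part.strip() for part in parts if part.strip())


if __name__ == "__main__":
    spec = PromptSpec(
        subject="A professional portrait of a contemplative figure",
        context="in alternative Japanese fashion style, melancholic atmosphere",
        technical="wet surface reflections, subtle rim lighting",
        camera="shallow depth of field, 85mm lens equivalent, soft shadows",
        details="realistic subsurface scattering, color-correct chromatic aberration",
    )
    print(spec.build())
```

Keeping each step in its own field makes it easy to vary one layer, for example the camera technique, while holding the rest constant when comparing results.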

Frequently Asked Questions (FAQ)

What is the fundamental difference between traditional and AI rendering?

How important is prompt length vs. precision?

What hardware requirements do modern diffusion models have?

Can personal images be used as rendering basis?

What is the legal situation with AI-generated images?

How do modern AI models handle bias?

What are the current limitations of diffusion models?

Conclusion: AI Rendering as Scientific Discipline

The development of diffusion models and prompt engineering represents a paradigm shift from rule-based to data-driven approaches. Current research shows that we are evolving from pure trial-and-error prompting to scientifically founded methods.

The cultural, technical, and ethical aspects of this field require responsible application and continuous education. Instead of inventing obscure terms, we should focus on established terminology and scientifically validated techniques.

Our Strategic Plan:

For a detailed description of our multi-phase optimization plan and overall SEO strategy, please visit our central article on GEO.

References

Current Diffusion Model Research

- Wang, Z., et al. (2025). Training Your Diffusion Model Faster with Less Data. arXiv:2507.05914. Research on improving diffusion model efficiency.

- Yamaguchi, S., et al. (2025). Analyzing Diffusion Models on Synthesizing Training Datasets. AISTATS 2025. Critical analysis of synthetic data limitations.

- Zhang, Y., et al. (2024). The Emergence of Reproducibility and Consistency in Diffusion Models. ICML 2024. Study on reproducibility and dataset dependency.

Cultural and Social Studies

- Liu, X., et al. (2025). An Examination of the History and Current State of Jirai Kei in China and Japan. Cross-Cultural Research Journal. Comprehensive sociocultural analysis of the Jirai Kei subculture.

- Nagoya University of Foreign Studies (2022). A Land-Mine Style Ready for the World: Zooming in on Jirai-kei fashion. Academic study on social prejudices and mental health.

- Yokogao Magazine (2025). JIRAI KEI – Japan’s Melancholic Fashion Movement. Journalistic analysis of cultural and social dimensions.

Prompt Engineering and Computer Vision

- OpenAI (2025). Best practices for prompt engineering with the OpenAI API. Official guidelines for scientific prompt engineering.

- Krasamo (2025). Prompt Engineering for Computer Vision Tasks. Technical guide for vision-language models.

- Prompt Engineering Guide. Comprehensive Prompt Engineering Resource. Scientific repository for prompt engineering techniques.

Computer Graphics and Rendering Techniques

- Kim, H., et al. (2009). Subsurface Scattering in Point-Based Rendering. Computer Graphics Lab, ETH Zurich.

- MJP (2019). An Introduction To Real-Time Subsurface Scattering. Practical implementation of subsurface scattering.

- Chaos Group (2023). What Are Caustics and How to Render Them the Right Way. Industry standard for caustics rendering.
